Continuous Cross-Platform Deployment with GitHub Actions

Today’s blog post will be rather technical, not focusing on writing strategies, Zettelkasten insights, or how much carbon dioxide a server produces. There are two reasons why this post exists, and why it appears now. The first has to do with the never-ending problems I’ve experienced building Zettlr for multiple platforms; the second is that a very good solution just arrived.

When developing a cross-platform application that should run on Windows, macOS, and ideally (most) Linux distributions, you face one major problem: how do you build it for all platforms at once? There are some cross-compilers, but they don’t necessarily work well when run on a platform that doesn’t match the target platform. Electron, upon which Zettlr is built, has begun experiencing similar problems, albeit to a lesser extent. Some native node modules need to be compiled for each target platform (something Zettlr does not use as of now). Beyond that, the main problem for me arose when Apple dropped 32-bit support in macOS Catalina. And, well, I updated, because I’m one of those “always update” folks. This meant: no more WINE, which I had relied on to build the Windows release on my Mac. The WINE developers are already working on a 64-bit port. Still, this situation highlighted once more that you should always try to build on the platforms you are targeting: if you build for Windows, use a Windows machine, and if you build for Linux, use a Linux distribution. Long story short: there are a lot of reasons why even an Electron app should be built on separate machines.

The second reason for this post is that GitHub, where Zettlr’s source code is hosted, has just launched a possible solution: GitHub Actions. To the best of my knowledge, there is as of now no good tutorial showing how to perform cross-platform releases with GitHub Actions. In short, GitHub Actions are a CI/CD service, where CI stands for continuous integration and CD for continuous deployment. Both are engineering buzzwords, much like “agile,” “UX,” or “UI.” In this post, I want to explain the almost 300 lines of pure YAML configuration that resulted from a day of implementing continuous deployment for Zettlr. I will stick to what I did and which problems I faced. I will also try to generalise my findings, so that even non-Electron apps will hopefully benefit from what I have to say!

So let’s go!

Preparations

This post assumes that you have already taken care of a few things:

- You have some cross-platform application at hand

- You can build the application successfully on your machine, at least for your own operating system

- You have one or more command-line scripts that you can type in the terminal to build the application (for Electron, something along the lines of npm run build; otherwise something like make && make install)

Quick Recap: CI/CD

Just so that we are on the same page: continuous integration refers to some automatic actions that are performed as soon as certain events occur. These actions run unit tests and make sure that the proposed changes (for instance through pull requests) do not break anything that worked before. Continuous deployment, on the other hand, refers to actions that deploy whatever you are building. This could be a page (for instance, they can trigger a full Jekyll rebuild and upload it to your server; Zettlr’s documentation actually uses this), or an app, which you’d like to release. The latter applies to Zettlr.

For a long time, I was sceptical of CI and CD. They make use of foreign servers and some black magic under the hood, and they require a lot of setup. I previously looked at TravisCI, as it’s one of the most popular services, but I always found the documentation difficult to understand, because it obviously does not replace an introductory course in “CI 101.” But then GitHub Actions came around the corner shortly before Catalina rolled out, so it was an opportunity I just had to seize.

The main difficulty when implementing CI/CD for a repository on GitHub lies in two things: knowing exactly what you would like to achieve, and understanding the logic behind the configuration of the service you choose. I have not tested them all, but I would say that whether you use TravisCI, GitHub Actions, or something else is mostly a matter of taste. They surely work somewhat differently, though, so think before you choose, as you will probably stick with your provider for a long time.

The benefits of CI/CD mostly fall under the heading “automate the boring stuff.” The main benefit is that a lot of development work happens automatically, so you don’t have to perform the same cumbersome tasks over and over. Additionally, this automation can happen in parallel on multiple computers. Sounds like a huge relief, especially for Open Source developers coding in their free time, right?

Introducing GitHub Actions

GitHub Actions launched in 2019, after Microsoft had acquired GitHub in 2018. So far, the biggest benefit the GitHub community has reaped from this acquisition is access to the vast Microsoft Azure network, basically Amazon Web Services (AWS) in blue. The primary argument for GitHub Actions over TravisCI and its competitors is that GitHub Actions are built into the repositories, so a lot of things work out of the box. The continuous integration service of the company hosting your repository will likely work best, as that company knows its own system inside out. (Well, at least this is what I hope. I’m looking at you, Windows!)

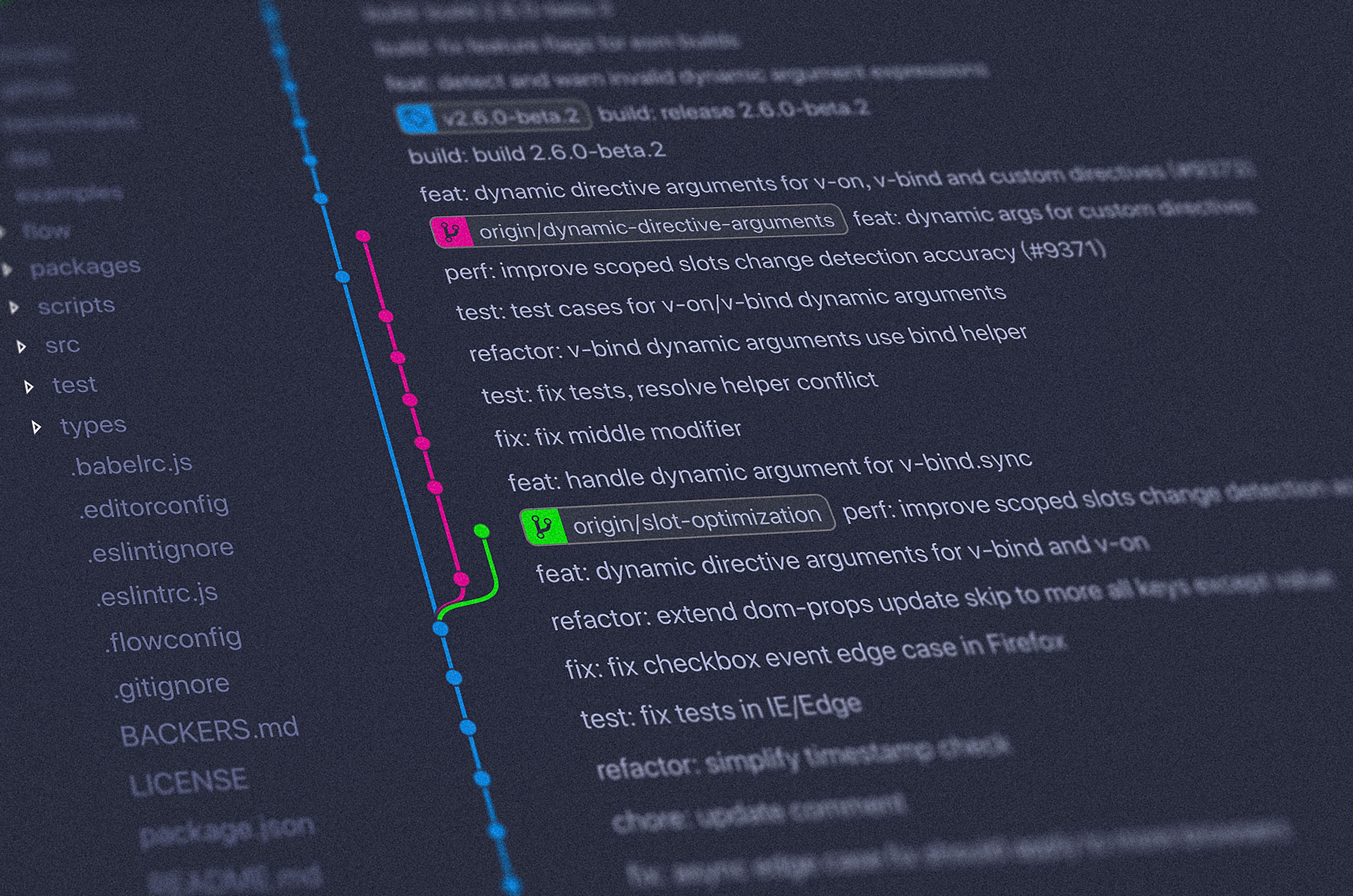

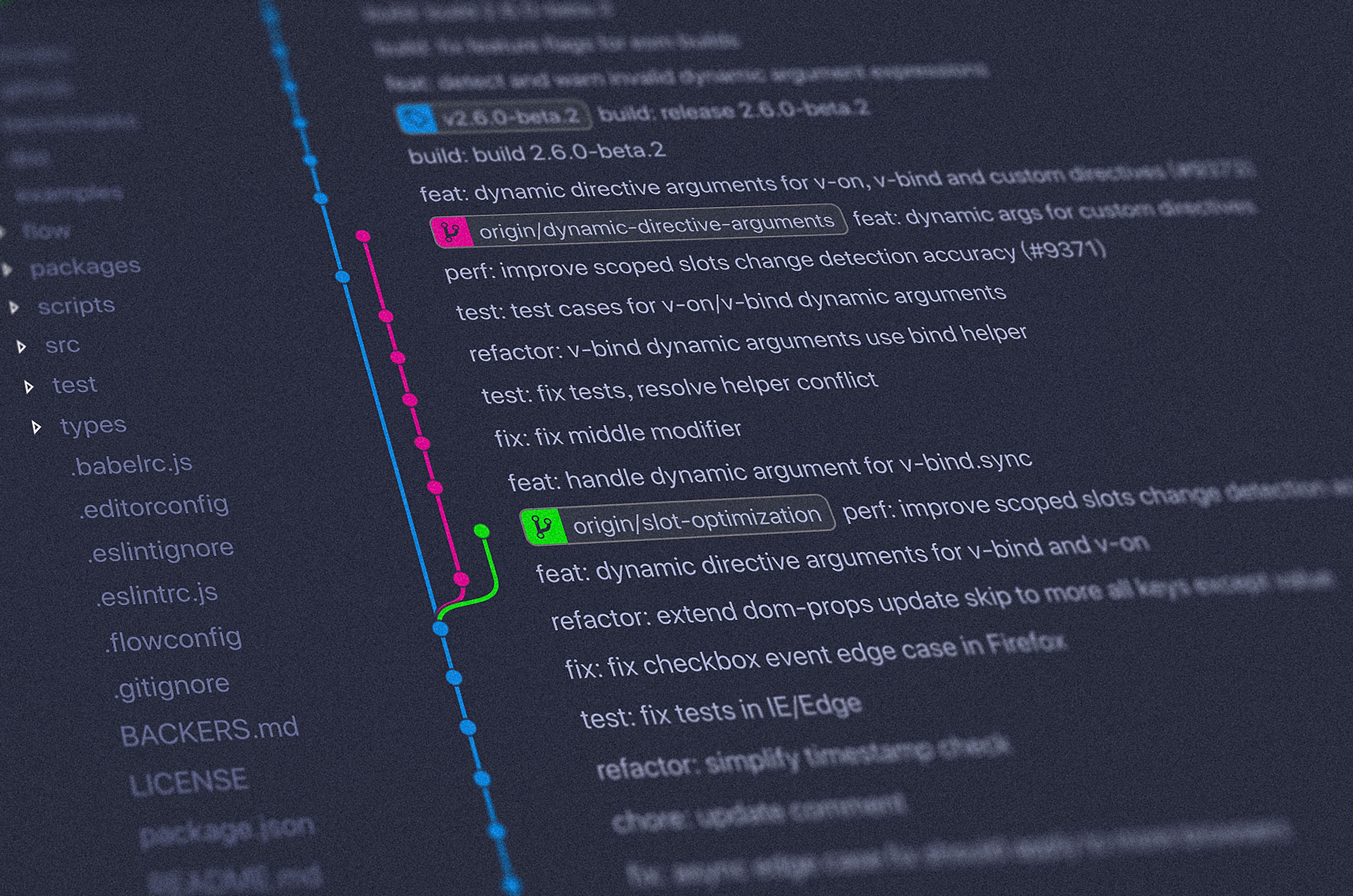

GitHub Actions are configured per repository. The special directory .github is used to configure the repository with, e.g., custom templates. GitHub Actions, or rather custom workflows, reside in .github/workflows. Every YAML file you put into that directory and push to master will automatically be parsed by GitHub and run according to your rules. And everything one needs for both continuous integration and deployment is already included: compiling, storing the builds, and creating releases.

On the Logic of GitHub Actions

Before finally analysing Zettlr’s release configuration, I would like to say something about the broader logic employed by GitHub Actions. This logic is what kept me from using TravisCI in the first place, and it also had me struggling with GitHub Actions: the core concepts of these platforms are not always explained in a straightforward way.

There are three conceptual levels in GitHub Actions. The top-most level is the workflow: each YAML file inside your .github/workflows directory constitutes one workflow. Per triggering event, each workflow runs only once, and you can tell the workflows apart on the Actions tab of your repository. Each workflow can contain several so-called jobs. By default, GitHub will try to run all of these jobs concurrently. If there are five jobs in a workflow, they will all run in parallel (or be queued, if you exhaust the limit of 20 parallel jobs). If one job within a workflow depends on the success of another, you can declare that the second job needs the first (needs: first). Each job, in turn, is divided into several steps, which always run in sequence.
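To make the scheduling rule concrete, here is a small sketch in JavaScript (Node.js, the language of Zettlr’s build scripts). It groups jobs into “waves”: jobs without needs all start in the first wave, and a job only starts once every job it needs has finished. This is a simplified model of my own, not GitHub’s actual scheduler. It ignores the concurrency limit, and the job names mirror Zettlr’s release workflow.

```javascript
// Simplified model (not GitHub's real scheduler): group jobs into waves
// based on their `needs` lists. Jobs in the same wave can run in parallel.
function scheduleWaves(jobs) {
  const done = new Set();
  const remaining = new Map(Object.entries(jobs));
  const waves = [];
  while (remaining.size > 0) {
    // A job is ready once everything it needs has finished.
    const ready = [...remaining.keys()].filter((name) =>
      (remaining.get(name).needs || []).every((dep) => done.has(dep))
    );
    if (ready.length === 0) {
      throw new Error('Unsatisfiable or circular "needs" dependencies');
    }
    for (const name of ready) {
      remaining.delete(name);
      done.add(name);
    }
    waves.push(ready);
  }
  return waves;
}

// Zettlr's release workflow: prepare_release needs build.
const waves = scheduleWaves({
  build: {},
  prepare_release: { needs: ['build'] },
});
console.log(waves); // [ [ 'build' ], [ 'prepare_release' ] ]
```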

Every job and every step can be made conditional: the job or step will only run if the provided condition is satisfied. This is especially useful for fitting matrix jobs to the specifics of the current operating system, so that certain steps only run on Windows, but not on macOS or Linux.

But wait: Matrix jobs? That is right. Imagine a matrix as a (nested) for-loop. Let’s say you want to build on Windows, Linux, and macOS: Then your matrix (actually more of a vector) will run the same job three times, and always pass the current operating system as a variable that you can use to determine which steps to run within the job. Only jobs can be run as a matrix. If you specify multiple variables, you get a real matrix. For instance, let’s say you want to build on all three operating systems three times. You could then also specify, e.g., the variable iterator and set it to an array [1, 2, 3]. Then you’d have nine concurrent jobs running in parallel! And also waste a lot of resources! Climate change, yay!
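The “nested for-loop” picture can be written out directly. Below is a minimal sketch that expands a matrix definition into one set of variables per job instance (a cartesian product). It is a simplified model and ignores any further matrix options GitHub offers.

```javascript
// Expand a matrix definition into one variable set per job instance,
// like nested for-loops over every key (a cartesian product).
function expandMatrix(matrix) {
  let combos = [{}];
  for (const [key, values] of Object.entries(matrix)) {
    const next = [];
    for (const combo of combos) {
      for (const value of values) {
        next.push({ ...combo, [key]: value });
      }
    }
    combos = next;
  }
  return combos;
}

const jobs = expandMatrix({
  os: ['ubuntu-latest', 'macos-latest', 'windows-latest'],
  iterator: [1, 2, 3],
});
console.log(jobs.length); // 9
console.log(jobs[0]); // { os: 'ubuntu-latest', iterator: 1 }
```

With only the os key, the same function yields three instances: the “vector” case.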

In general, you will only need a few workflows. Zettlr currently has two: one for testing on every pull request, and one for building as soon as develop is merged into the master branch. Within most workflows, one job will be enough (during testing, the workflow has one job: to run the unit tests). The release workflow for Zettlr contains two jobs, because one builds the assets and the other publishes them (I’ll explain why in a minute). But you will certainly have a lot of steps.

Here’s a good rule of thumb that has helped me a lot in finally grasping the concept of CI. First, think of what you currently do, and what you would like to automate.

- A workflow is something from A to Z. For instance, every time you modify the code, you head into the terminal and run the tests, which sometimes means several commands in order.

- A job is a series of steps that produces one single result.

- A step is, more or less, one line of console input, such as cd .. or ls -al.

Meta-Concepts and Caveats

Two very important things relate to the meta level of what GitHub Actions are meant to do. The first: every single job (not workflow!) runs on its own dedicated virtual machine. That means there is no direct way to exchange data between jobs; each job is completely standalone. The second: each workflow run has a small place to store artefacts, and this is what you can use to transfer data between jobs. A job can contain steps that simply “upload” files to this artefact storage, and another job can contain steps that download artefacts from that very storage. Theoretically, you could populate an SQLite database or an Excel sheet with information generated in one job and upload it. Another job could then download it and read the information. But please: don’t do that.
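To illustrate the isolation, here is a toy model in which every job gets its own empty “disk” (a Map), and the only shared place is the artefact storage. The class and function names are hypothetical and exist only for this illustration. The model says nothing about how GitHub actually stores artefacts.

```javascript
// Toy model: each job has a private disk; only the artefact storage is
// shared between the jobs of one workflow run.
class ArtifactStorage {
  constructor() {
    this.artifacts = new Map();
  }
  upload(name, contents) {
    this.artifacts.set(name, contents);
  }
  download(name) {
    if (!this.artifacts.has(name)) {
      throw new Error(`Artifact not found: ${name}`);
    }
    return this.artifacts.get(name);
  }
}

function runJob(storage, body) {
  const disk = new Map(); // fresh, empty "VM" disk for every job
  body(disk, storage);
  return disk;
}

const storage = new ArtifactStorage();

// Job 1 builds a file and uploads it before its VM is thrown away.
runJob(storage, (disk, store) => {
  disk.set('app.dmg', 'binary data');
  store.upload('app.dmg', disk.get('app.dmg'));
});

// Job 2 starts with an empty disk and must download the artefact first.
const secondDisk = runJob(storage, (disk, store) => {
  disk.set('app.dmg', store.download('app.dmg'));
});
console.log(secondDisk.get('app.dmg')); // prints "binary data"
```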

Building a Complete Workflow

Now after the long introduction which hopefully makes some points clear, let’s dive into the YAML file Zettlr uses for its release cycle! At the time of writing, almost 300 lines of beauty drive that monstrosity (link to the file). Let us start from the beginning: The workflow should do the following:

- Compile all assets (templates, stylesheets, the Vue-components)

- Produce an installer for each platform

- Generate SHA256 checksums

- Create a release draft and upload all assets to it

Defining When to Run the Workflow

First, we tell GitHub to trigger the workflow only when something is pushed to the master branch. This is because I always leave master alone and only push to it when I want to release. Furthermore, I want to tag as close to the release as possible, that is: when I publish the release. For other projects, a tag event might be more suitable, but this was the easiest solution for me:

```yaml
on:
  push:
    branches:
      - master
```

As you can see, there’s a lot of object nesting happening, so if you are used to the weird configurations of some JavaScript modules, you’ll feel right at home. Every time an event matches whatever you have defined under on, this workflow will run. You can even go crazy and run a workflow on a cron schedule every five minutes.
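Here is a rough sketch of what the branch filter above decides. The event object shape ({ name, ref }) is an assumption made for this illustration. The refs/heads/ prefix is how Git names branch refs. GitHub’s real filter also understands glob patterns, which this sketch ignores.

```javascript
// Simplified model of the `on: push: branches` filter: the workflow runs
// only for pushes to one of the listed branches (no glob support here).
function shouldRun(onConfig, event) {
  if (event.name !== 'push' || !onConfig.push) return false;
  const branches = onConfig.push.branches;
  if (!branches) return true; // no branch filter: every push triggers
  const prefix = 'refs/heads/';
  if (!event.ref.startsWith(prefix)) return false; // e.g. a tag push
  return branches.includes(event.ref.slice(prefix.length));
}

const onConfig = { push: { branches: ['master'] } };
console.log(shouldRun(onConfig, { name: 'push', ref: 'refs/heads/master' })); // true
console.log(shouldRun(onConfig, { name: 'push', ref: 'refs/heads/develop' })); // false
```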

Next, we have to think about jobs. You have to remember: As we need three operating systems, we will need three jobs. Instead of writing three separate jobs, we’ll be using matrices. As explained above, a matrix basically duplicates a job and passes the values you’ve defined.

Creating One Job for Three Platforms

So first, let us create the job build in the jobs object. (Maybe this helps to see the structure: my workflow only has three properties: name, on, and jobs.) Also, let’s define the strategy. At first, I wondered why the matrix has to be nested inside strategy. It turns out that strategy also holds other settings, such as fail-fast and max-parallel.

```yaml
strategy:
  matrix:
    os: [ubuntu-latest, macos-latest, windows-latest]
```

This tells GitHub to run the job build three times and to pass one of the values in os to each instance. Within each job, you can access the value with matrix.os. You could also do the following:

```yaml
strategy:
  matrix:
    os: [ubuntu-latest, macos-latest, windows-latest]
    iterator: [1, 2, 3]
```

This would spawn the job nine times, with matrix.os and matrix.iterator being assigned the value tuples (ubuntu, 1), (ubuntu, 2), (ubuntu, 3), (macos, 1), (macos, 2), (macos, 3), (windows, 1), (windows, 2), (windows, 3). Note that the matrix does not tell GitHub that it should run on different operating systems! It simply spawns the jobs with these values. You could rename os to foobar!

Next, at the beginning of each job, a new VM is started, which is shut down after the job completes. That means we have to tell GitHub which virtual machine the job should run on:

```yaml
runs-on: ${{ matrix.os }}
```

As you can see, GitHub uses some mixture of moustache and JavaScript template-string syntax to access variables. To make the point more clear: you could also do the following:

```yaml
runs-on: ${{ matrix.iterator }}
```

But then GitHub would bark at you that “1” is not a valid operating system.
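A minimal sketch of the substitution idea follows: placeholders of the form ${{ matrix.<key> }} are replaced with the values of one matrix instance. This is only a model of the matrix lookup. GitHub’s real expression language also supports operators, functions, and other contexts such as steps and secrets.

```javascript
// Simplified model: replace ${{ matrix.<key> }} placeholders with the
// values of one matrix instance; unknown keys are reported as errors.
function substituteMatrix(template, matrix) {
  return template.replace(/\$\{\{\s*matrix\.([A-Za-z0-9_-]+)\s*\}\}/g, (match, key) => {
    if (!(key in matrix)) throw new Error(`Unknown matrix key: ${key}`);
    return String(matrix[key]);
  });
}

const instance = { os: 'macos-latest', iterator: 1 };
console.log(substituteMatrix('runs-on: ${{ matrix.os }}', instance));
// prints "runs-on: macos-latest"
console.log(substituteMatrix('runs-on: ${{ matrix.iterator }}', instance));
// prints "runs-on: 1", which is exactly what GitHub would then reject
```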

Defining What a Job Should Do

So, now we have our three virtual machines waiting for something to do. So think again: What do we need to do to create a release? Well, let us have a look at the make-script I’ve been using for the last year. It always runs the following tasks:

1. Retrieve the current version from package.json (to name the artefacts)

2. Build the stylesheets

3. Build the templates

4. Pre-build the revealJS library

5. Build the Vue components

6. Download the default language files

7. Build the Windows release

8. Build the macOS release

9. Build the Debian and Fedora releases

10. Build the AppImage releases

11. Generate the checksums for all artefacts

12. Upload everything and draft a new release

Steps 7 and 8, the Windows and macOS builds, are the two that need their own operating system; the rest can run on every platform. But as each VM is initially empty, we also have to run steps 1 through 5 on each platform. In the end, we will need three VMs. Each runs steps 1 through 5 and then, depending on the platform, step 7, step 8, or steps 9 and 10. Steps 9 and 10 can be combined, as both are Linux targets. I left step 6 out of the workflow because I hope I will always remember to keep the language files up to date myself.

The most difficult logical step for me was number 11. You should not generate the checksums as soon as each file is built, because then you would have to upload one checksum file per binary, and I want all of them in one file. In the end, this kept me from doing something stupid with the workflow. I had originally intended to create a new release draft beforehand and then upload the assets directly to it, which would have been pretty complicated. So let’s continue: our matrix job needs some steps. These run one after another and are defined as an array, steps. Again, for clarification: this job only has three properties: strategy, runs-on, and steps.

Setting up the Build Environment

```yaml
- uses: actions/checkout@v2
```

The first step is the equivalent of git clone https://github.com/Zettlr/Zettlr.git, which is handled by the official “checkout” action that I import here. It has been designed with the common case in mind. By default, it fetches only the single commit that triggered the workflow (we do not need the full history!), which in our case is the newest commit on master. This is precisely what we want. You can, of course, customise what this action copies onto the VM. Or run git clone manually ¯\_(ツ)_/¯.

```yaml
- name: Setup NodeJS 12.x
  uses: actions/setup-node@v1
  with:
    node-version: '12.x'
```

This simply copies over a pre-existing archive containing the current 12.x release of NodeJS, which already resides on the VM image for faster access (setting up NodeJS takes around 10 seconds max). The name is only used to make the console output prettier when the step runs; you can omit it if you don’t care. Important: I realised that lts, for the long-term support release, did not work, which is why I defaulted to 12.x.

In general, each action can receive inputs, which are specified in the with object. Think of the with object as an inverted Python statement (with something do:). The possible inputs are always documented in the README of the respective action.

```yaml
- name: Manually create the handlebars-directory (Windows only)
  if: matrix.os == 'windows-latest'
  run: New-Item -Path "./source/common/assets" -Name "handlebars" -ItemType "directory"
```

Here you have one more example for why I hate Windows. When I first set up the workflow, the Windows-build failed, because the node-process compiling the templates crashed as soon as it tried to create the handlebars-directory. I have no clue why, but creating the directory up-front worked somehow. (All other directories, interestingly, are created without any error). Notice the ridiculous PowerShell equivalent for mkdir ./source/common/assets/handlebars. Additionally, here you can see the if in action.
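An alternative I have not used in the workflow is Node’s own fs.mkdirSync with { recursive: true }. It creates a directory and any missing parents on every platform, and it does not fail if the directory already exists. A step running such a snippet via node could therefore replace the PowerShell command on all three VMs. I have not verified whether it avoids the original Windows crash. The helper name ensureDir is hypothetical, and the demo works in a temporary folder rather than the real repository.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create a directory (and any missing parents) on Windows, macOS and Linux alike.
// With { recursive: true }, an already existing directory is not an error.
function ensureDir(dir) {
  fs.mkdirSync(dir, { recursive: true });
  return fs.statSync(dir).isDirectory();
}

// Demo inside a temporary folder instead of the real repository.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'zettlr-demo-'));
const target = path.join(root, 'source', 'common', 'assets', 'handlebars');
console.log(ensureDir(target)); // true
console.log(ensureDir(target)); // true again: calling it twice is harmless
```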

Now we turn to setting up the environment. In the beginning, it was really hard for me to get it into my head that each VM is like a new user who has to set up everything from scratch, because the Zettlr directory does not yet exist on her computer. For me, the directory is baked into my computer, and I actually had a small panic attack when I bought my new computer and the directory was missing.

This is where steps 1 through 5 are defined. I was lazy and did not split them up into separate steps, because the testing workflow is meant for analysing what fails. When building for release, everything should work fine already.

```yaml
- name: Set up build environment and compile the assets
  run: |
    npm install
    cd ./source
    npm install
    cd ..
    npm run less
    npm run handlebars
    npm run reveal:build
    npm run wp:prod
```

Pretty boring stuff, but afterwards we will have all node_modules and all assets compiled and ready for shipping!

Provide Custom Variables from your Scripts

```yaml
- name: Emit pkgver
  id: pkg
  run: |
    pkgver=$(node ./scripts/get-pkg-version.js)
    echo ::set-output name=version::$pkgver
  shell: bash
```

This step runs the get-pkg-version.js script from the repository’s scripts folder with node. It saves the script’s console output into the pkgver variable and then performs some magic. If you echo a line containing the magic phrase ::set-output in GitHub Actions, you define an output that will be available to all subsequent steps of the same job! Isn’t that cool? The syntax is pretty straightforward:

```
echo ::set-output name=<your variable name>::<the value>
```

Afterwards the output (you can generate multiple variables) will be available in other steps through the object steps.<your step id>.outputs.<your variable name>. In this case: steps.pkg.outputs.version.
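The mechanism is easier to grasp as a small parser. The sketch below picks ::set-output lines out of a step’s console log and collects them the way steps.pkg.outputs exposes them. It is a simplified model: real runners also handle escaping, which this ignores. Note also that GitHub has since deprecated ::set-output in favour of appending name=value lines to the file referenced by $GITHUB_OUTPUT.

```javascript
// Simplified model of how a runner could pick step outputs out of the
// console log of a step (no escaping rules handled).
function parseSetOutput(log) {
  const outputs = {};
  for (const line of log.split(/\r?\n/)) {
    const match = /^::set-output name=([^:]+)::(.*)$/.exec(line.trim());
    if (match) outputs[match[1]] = match[2];
  }
  return outputs;
}

const log = 'Building...\n::set-output name=version::1.6.0\nDone.';
const steps = { pkg: { outputs: parseSetOutput(log) } };
console.log(steps.pkg.outputs.version); // prints "1.6.0"
```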

One thing I’d like to say here: With the property shell: bash you can determine which shell you would like to run the value of run with. The default for Windows is PowerShell, but in this case we want bash, even on Windows, because otherwise we would need to write two steps, one for Bash (macOS and Linux) and one for PowerShell. (The PowerShell-code I have used above is only because this will only run on Windows and I want to show how bad Windows is.)

The following steps then apply if the matrix.os is the right one:

```yaml
- name: Build Windows NSIS installer
  if: matrix.os == 'windows-latest'
  run: npm run release:win
```

For each value of matrix.os, the corresponding package.json script is called: release:win, release:mac, or release:linux and release:app-image. Then each of the six resulting files is uploaded, and afterwards the corresponding VM is shut down again.
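The mapping from matrix value to release scripts can be summarised as a lookup table. The script names come from the workflow; the table and the releaseCommands helper are only an illustration of the conditional steps, not part of Zettlr’s code.

```javascript
// Which package.json scripts each matrix instance runs in the build job.
const RELEASE_SCRIPTS = {
  'windows-latest': ['release:win'],
  'macos-latest': ['release:mac'],
  'ubuntu-latest': ['release:linux', 'release:app-image'],
};

// Turn a matrix.os value into the npm commands its conditional steps run.
function releaseCommands(os) {
  const scripts = RELEASE_SCRIPTS[os];
  if (!scripts) throw new Error(`No release scripts for ${os}`);
  return scripts.map((script) => `npm run ${script}`);
}

for (const os of Object.keys(RELEASE_SCRIPTS)) {
  console.log(os, '->', releaseCommands(os).join(' && '));
}
```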

Secrets and Environment Variables

At this point, I’d like to highlight the macOS build step:

```yaml
- name: Build macOS DMG file
  if: matrix.os == 'macos-latest' # Only if the job runs on macOS
  run: APPLE_ID=${{ secrets.APPLE_ID }} APPLE_ID_PASS=${{ secrets.APPLE_ID_PASS }} CSC_LINK=${{ secrets.MACOS_CERT }} CSC_KEY_PASSWORD=${{ secrets.MACOS_CERT_PASS }} npm run release:mac
```

What you can see here is that I make use of four secrets. When building Electron apps, you need to sign the resulting binary. For macOS, you also need to “notarize” the app, that is: upload it to Apple’s servers and have them verify that you didn’t just create a virus. Luckily, electron-builder handles both tasks for us, but we have to provide it with two sets of credentials. The Apple ID and the Apple ID pass(word) are the account credentials the notarization step needs to upload the file to Apple. The cert variables are my base64-encoded developer certificate and the password with which it is encrypted. Obviously, I should not add these things to git, but the actions need them. That’s where the secrets object comes into play: it is available throughout the workflow and can be used by steps to pull in necessary information.

GitHub stores secrets encrypted (for your repository, go to Settings → Secrets to manage them), and in any console output they are masked as ***. So whenever you have sensitive information, simply store it as a secret. But this object is only available to the workflow itself, not to your own scripts. This means that you have two ways of passing these values to your scripts: command-line flags or, as I did, environment variables. This way, electron-builder and custom scripts can access them via the process.env object, e.g. process.env.APPLE_ID.
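When passing secrets as environment variables, a custom script benefits from failing early if one is missing, e.g. because it was never added to the repository. Below is a hypothetical helper that does this. The variable names match the macOS step above; the fake environment in the demo stands in for process.env.

```javascript
// Hypothetical helper: return the requested environment variables, or fail
// early with a clear message if a secret was not passed in.
function requireEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}

// Demo with a fake environment instead of the real process.env.
const creds = requireEnv(['APPLE_ID', 'APPLE_ID_PASS'], {
  APPLE_ID: 'dev@example.com',
  APPLE_ID_PASS: 'not-a-real-password',
});
console.log(creds.APPLE_ID); // prints "dev@example.com"
```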

Using the Artefact Storage

```yaml
- name: Cache macOS release
  if: matrix.os == 'macos-latest'
  uses: actions/upload-artifact@v1
  with:
    name: Zettlr-${{ steps.pkg.outputs.version }}.dmg
    path: ./release/Zettlr-${{ steps.pkg.outputs.version }}.dmg
```

This uploads the file given in path to the artefact storage and names it after the value of name. Here you can see that the artefact already receives its final name: Zettlr-1.6.0.dmg in this case. Unfortunately, the upload-artifact action cannot upload multiple files at once, which explains a lot of the boilerplate code that follows.

Once something is uploaded, it persists, even after the VM shuts down. Every job within the same workflow run can access the same artefacts in the storage.

Preparing a Release

Now three jobs have run successfully, and there are six files in the artefact storage. However, we still need to generate the checksums for these files. The files also should not remain in the artefact storage; they need to be moved to a release draft. This is what the next job, prepare_release, does.

```yaml
needs: [build]
```

This job does not have a strategy, but it needs the job build. In other words: if any of the build jobs fails, this job will not be executed. This saves nerves and computing power. Think of the environment! Remember that I mentioned that GitHub Actions will try to run as many jobs in parallel as possible? This is the antidote: the needs property keeps the prepare_release job queued until all build jobs have finished. The benefit? We know for sure that there will be six artefacts in the storage, which we will now process.

```yaml
runs-on: [ubuntu-latest]
```

Let’s be honest: We will always run everything on Linux, except building the NSIS-installer and the DMG-file, so we’ll be using a Linux-VM for this job.

Then, we also need node and the repository-code. Why? Because we need to know how the artefacts are named, and therefore we need the package.json. And, as it is easier to extract something from a JSON-file using a NodeJS script than using shell code, we also need Node and get-pkg-version.js from the repository’s scripts-directory.
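The script itself is not shown in this post, so here is a hypothetical sketch of what a script like get-pkg-version.js might look like. It parses package.json and prints only the version, so that pkgver=$(node ...) captures nothing else. It assumes node runs from the repository root, which is the default working directory of run steps after checkout. The demo parses a string instead of reading the real file.

```javascript
const fs = require('fs');

// Hypothetical sketch: extract the version from the contents of package.json.
function getPkgVersion(packageJsonText) {
  const pkg = JSON.parse(packageJsonText);
  if (typeof pkg.version !== 'string') {
    throw new Error('package.json has no "version" field');
  }
  return pkg.version;
}

// Print only the version (no extra output), for use with pkgver=$(node ...).
function main(file = 'package.json') {
  process.stdout.write(getPkgVersion(fs.readFileSync(file, 'utf8')));
}

// In a real script, main() would be called here; this demo parses a string instead.
console.log(getPkgVersion('{"name": "zettlr", "version": "1.6.0"}')); // prints "1.6.0"
```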

```yaml
- uses: actions/checkout@v2
- name: Setup NodeJS 12.x
  uses: actions/setup-node@v1
  with:
    node-version: '12.x'
- name: Retrieve the current package version
  id: pkg
  run: |
    pkgver=$(node ./scripts/get-pkg-version.js)
    echo ::set-output name=version::$pkgver
```

Next, we need to download all our assets from the artefact store:

```yaml
- name: Download the Windows asset
  uses: actions/download-artifact@v1
  with:
    name: Zettlr-${{ steps.pkg.outputs.version }}.exe
    path: .
```

This downloads the artefact with the given name straight into the root directory, for simplicity. I repeat this step six times, as download-artifact also cannot download multiple files at once.
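Because the six download steps only differ in the file name, it helps to see the naming scheme as a function. The suffixes below are taken from the checksum step of this workflow. The function itself is just an illustration.

```javascript
// The six artefact names for a given version, following the naming
// scheme used in the checksum step of the workflow.
function artifactNames(version) {
  const base = `Zettlr-${version}`;
  return [
    `${base}.exe`,
    `${base}.dmg`,
    `${base}-amd64.deb`,
    `${base}-x86_64.rpm`,
    `${base}-i386.AppImage`,
    `${base}-x86_64.AppImage`,
  ];
}

console.log(artifactNames('1.6.0'));
```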

Finally, we need to create a last file and store all SHA256-checksums in it. This again is basic Bash syntax.

```yaml
- name: Generate SHA256 checksums
  run: |
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}.exe" > "SHA256SUMS.txt"
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}.dmg" >> "SHA256SUMS.txt"
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}-amd64.deb" >> "SHA256SUMS.txt"
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}-x86_64.rpm" >> "SHA256SUMS.txt"
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}-i386.AppImage" >> "SHA256SUMS.txt"
    sha256sum "Zettlr-${{ steps.pkg.outputs.version }}-x86_64.AppImage" >> "SHA256SUMS.txt"
```

Now we verify the checksums:

```yaml
- name: Verify checksums
  run: sha256sum -c SHA256SUMS.txt
```
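For readers who want to see what these two commands do, here is a sketch that reproduces them in Node using the built-in crypto module. It writes lines in the text-mode format sha256sum prints (digest, two spaces, file name) and then checks them in the spirit of sha256sum -c. The demo works on small in-memory “files” rather than the real installers. The workflow itself keeps using the shell commands.

```javascript
const crypto = require('crypto');

// Hex-encoded SHA256 digest of a string or Buffer.
function sha256Hex(contents) {
  return crypto.createHash('sha256').update(contents).digest('hex');
}

// One line in the format sha256sum prints in text mode:
// the digest, two spaces, then the file name.
function sha256Line(name, contents) {
  return `${sha256Hex(contents)}  ${name}`;
}

// Check each line of a SHA256SUMS text against in-memory file contents,
// similar in spirit to `sha256sum -c`.
function verifySums(sumsText, files) {
  return sumsText
    .split('\n')
    .filter((line) => line.trim() !== '')
    .map((line) => {
      const match = /^([0-9a-f]{64}) [ *](.+)$/.exec(line);
      if (!match) return { name: line, ok: false };
      const [, expected, name] = match;
      const known = Object.prototype.hasOwnProperty.call(files, name);
      return { name, ok: known && sha256Hex(files[name]) === expected };
    });
}

// Demo with two small in-memory "files" instead of the real installers.
const files = { 'a.txt': 'abc', 'empty.txt': '' };
const sums = Object.entries(files)
  .map(([name, contents]) => sha256Line(name, contents))
  .join('\n') + '\n';
console.log(sums);
console.log(verifySums(sums, files)); // both entries have ok: true
```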

As everything is done now, let’s create a draft release:

```yaml
- name: Create a new release draft
  id: create_release
  uses: actions/create-release@v1
  env:
    GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
  with:
    tag_name: ${{ steps.pkg.outputs.version }}
    release_name: Release ${{ steps.pkg.outputs.version }}
    body: If you can read this, we have forgotten to fill in the changelog. Sorry!
    draft: true
```

This creates a new draft release with a nice message that should be overwritten by our Changelog. Please note that tag_name and release_name are both required. In my case, I simply use the version number. Remember, everything can be modified afterwards. Here you can also see that a secret GITHUB_TOKEN is necessary, to give the action the authority to create a release on your behalf. It is generated automatically, so you don’t have to worry about it.
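Under the hood, creating a release is a call to GitHub’s REST API endpoint POST /repos/{owner}/{repo}/releases. The sketch below only builds that request and does not send it, so no network access or real token is needed. The owner, repository, and token values are placeholders. The action’s release_name input corresponds to the API’s name field.

```javascript
// Build (but do not send) the request that creates a draft release through
// GitHub's REST API endpoint POST /repos/{owner}/{repo}/releases.
function buildCreateReleaseRequest({ owner, repo, version, token }) {
  return {
    url: `https://api.github.com/repos/${owner}/${repo}/releases`,
    init: {
      method: 'POST',
      headers: {
        Accept: 'application/vnd.github+json',
        Authorization: `Bearer ${token}`,
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({
        tag_name: version,
        name: `Release ${version}`, // what the action's release_name input sets
        body: 'If you can read this, we have forgotten to fill in the changelog. Sorry!',
        draft: true,
      }),
    },
  };
}

const req = buildCreateReleaseRequest({
  owner: 'Zettlr',
  repo: 'Zettlr',
  version: '1.6.0',
  token: 'placeholder-token',
});
console.log(req.url); // prints "https://api.github.com/repos/Zettlr/Zettlr/releases"
```

On Node 18 or newer, the request could then be sent with fetch(req.url, req.init).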

“Why does this step also have an ID?” you may ask. Well, because this is another step whose output we need, more precisely the upload_url variable. This is where we push all our files to, which is the last step of the workflow:

```yaml
- name: Upload Windows asset
  uses: actions/upload-release-asset@v1.0.1
  env:
    GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
  with:
    upload_url: ${{ steps.create_release.outputs.upload_url }}
    asset_path: ./Zettlr-${{ steps.pkg.outputs.version }}.exe
    asset_name: Zettlr-${{ steps.pkg.outputs.version }}.exe
    asset_content_type: application/x-msdownload
```

This step is, again, repeated six times, so that all assets are uploaded and ready. But as there are no new concepts here, there’s no need to go over it again!
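For the curious, here is what happens to upload_url. The release API returns it as a URI template ending in {?name,label}. To upload a file, that part is replaced by a real query string carrying the asset name. The sketch shows this expansion plus a lookup of content types per file extension. Only the .exe type appears in the workflow above; the other types are my own assumptions, and the release ID in the demo URL is a placeholder.

```javascript
// Turn the upload_url URI template (ending in {?name,label}) into a concrete
// upload URL for one asset.
function expandUploadUrl(uploadUrl, assetName) {
  const base = uploadUrl.replace(/\{\?[^}]*\}$/, '');
  return `${base}?name=${encodeURIComponent(assetName)}`;
}

// Content types per extension. Only the .exe type appears in the workflow;
// the others are assumptions for this sketch.
const CONTENT_TYPES = {
  '.exe': 'application/x-msdownload',
  '.dmg': 'application/x-apple-diskimage',
  '.deb': 'application/vnd.debian.binary-package',
  '.rpm': 'application/x-rpm',
  '.AppImage': 'application/octet-stream',
};

function contentTypeFor(assetName) {
  const ext = Object.keys(CONTENT_TYPES).find((e) => assetName.endsWith(e));
  return ext ? CONTENT_TYPES[ext] : 'application/octet-stream';
}

const uploadUrl = 'https://uploads.github.com/repos/Zettlr/Zettlr/releases/1/assets{?name,label}';
console.log(expandUploadUrl(uploadUrl, 'Zettlr-1.6.0.exe'));
// prints "https://uploads.github.com/repos/Zettlr/Zettlr/releases/1/assets?name=Zettlr-1.6.0.exe"
console.log(contentTypeFor('Zettlr-1.6.0.exe')); // prints "application/x-msdownload"
```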

Wrapping Up: Continuous Deployment is a Gift

First, sorry for this very long post, but I need to understand concepts before applying them. I don’t like simply copying code I don’t understand (and neither should you! Copying from Stack Overflow is only permitted if you know what the code does! ;). I assume there are other people whose brains work similarly, so I hope this explanation helps. Let’s finish with a short wrap-up:

- Do you do certain tasks over and over again? Create a workflow for them!

- Do you sometimes have to do something repeatedly with minor differences? Create matrix jobs for it! You don’t have to use matrices to run jobs on different machines. Remember, a matrix is nothing more than a simple for-loop.

- You don’t have to clone your repository in a workflow. There are other possible workflows that simply label new issues or pull requests!

- As a very simple analogy: Imagine GitHub workflows as cronjobs. You could simply create one shell-script per workflow and run that one instead.

- Need Electron code signing or other additional steps? Simply add new steps at the correct positions!

May this guide help to accelerate development of Open Source software!